A tale of two ZFS Servers, or how I ended up using two HP Microservers for Storage, Virtualization, Containers, Home Automation, you name it ...

Introduction

I bought the house I am currently living in back in 2004, and during the initial renovation I took pains to run multiple lines of Cat6 cable to all rooms from a single 'utility rack'. This has given me a high level of flexibility when deciding what kind of servers/services to run over the years.

One of the few rules I have been trying to stick to is that anything I use on a continuous basis (i.e. powered on 24x7x365) has to have a low power draw.

My personal limit for always-on peripherals in the house has increased over the years, due to the growing number of automation and media devices, but I have always been able to keep it under the 200W mark.

During these 15 years I have gone through multiple iterations of the hardware and services running in the house, always trying to balance having my data stored in a reasonably safe way with having my internet access available most of the time. My ability to connect to and work from home has benefited from these 'architectural' decisions.

This changed three years ago, when my studio became my main work environment: I doubled my DSL connection to have more availability in case of faults and upgraded the hardware in my firewall to more easily replaceable components, but I still kept using a single NAS for everything else.

Pre-Update

The hardware that was running 24x7 up to my last rework was:

- Cisco SG-200 24 port switch

- 2x Ubiquiti AC-LR-PRO for WiFi coverage of the house and the yard

- 2x Raspberry pi2

    - Gas burner automation

    - Volumio Media Player

- 1x Raspberry pi1B (Home Energy Consumption, solar PV energy production, RFM01 sensors receiver)

- 1x Micro-Atx board + Intel I350T4 nic running Pfsense as firewall

- 1x VDSL modem

- 1x WIFI WAN AP

- 1x Synology DS713+ with additional expansion unit (DX513) running a total of 7 3.5" HDDs

    - 16TB of SHR Volumes

    - 2TB of RAID1 Volumes

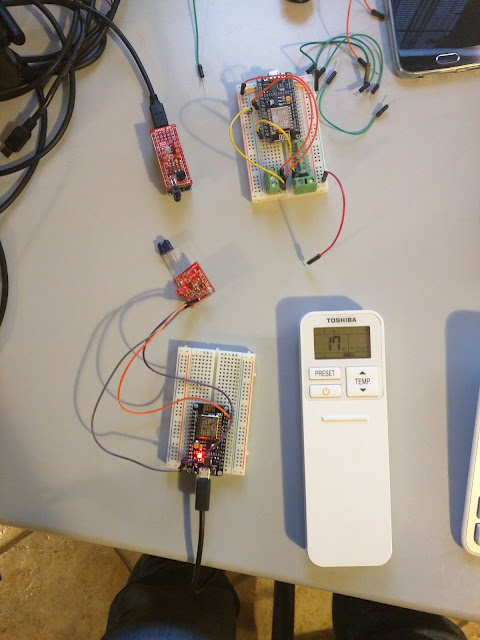

- Multiple ESP8266 nodes scattered around the house pulling automation duty like

    - gate opener

    - A/C controller

    - pool controller

    - Christmas lights

    - remote raspberry hard reset

- a RFM01 capable Arduino controlling two sump pumps and pulling flood detection duty

- 1x Amazon FireTv

- 2x Desktop PCs (OSX)

- 4x Laptops

- 2x Android Tablets

- 2x Ipads

- Various smartphones

All this hardware was supporting the following services:

- Dual WAN service to all House devices

- NAS Service to all House devices

- AFP/Timemachine backup server for all Apple computers

- File/Share Based sync for OSX/Windows clients (Synology CloudSync)

- Backup of Photos to Amazon Cloud Storage service

- ISCSI LUN provider (all Raspberry Pis boot from LAN using an ISCSI LUN as storage)

- OpenHab Home automation system

- Mosquitto MQTT server

- PLEX Media Server

- Mysql Server

- REDIS Server

- EmonCMS energy monitoring platform

- Node-RED automation platform

- Internal Mail Server

- Influxdb Time Series server

- Grafana/ELK server

All the services except the firewall were running on the Synology, using a combination of Apps and Docker containers. With 4GB of available RAM and a 2-core Atom processor, the Synology was always short of compute power (no transcoding possible in Plex, Mosquitto sometimes dropping messages due to high CPU load/swapping) and memory: depending on the combination of containers running, the system would swap and slow to a crawl.

Because of that, and because of the need to get some sort of redundancy going for the storage and connectivity part of what has de facto become my main work environment, I started looking into options to accomplish that.

Choices, choices, choices

Software

I ruled out another Synology appliance primarily because of cost, and also because of limited options in terms of hardware expandability. Also, with current drive capacities, Synology custom RAID is not really an option anymore (Synology itself disables it on their business appliances).

I also ruled out a rackmount server, primarily because of power draw, and started looking into low/moderate power options with real server capabilities.

I entertained for a bit the notion of running Xpenology, a hack that enables the Synology firmware to be run on non-Synology hardware, but a couple of experiments were sufficient to rule it out: I didn't want my storage and services to be wiped out because of a failed hacked firmware update. Also, the added value of the Synology platform has decreased in recent years, because a lot of other options have become viable in the meantime.

Another factor in choosing the software has been my exposure to ZFS based appliances, in their Oracle incarnation (ZFS storage over FC/Infiniband/Ethernet) and in their lesser variant (FreeNAS/BSD based): you quickly get used to having a lot of functionality and performance, and it is difficult to let it go once you've tasted it.

Given my power (and budget) constraints, I chose FreeNAS as my main driver for the SAN and virtualization part.

These are the FreeNAS provided components I am using to provision all the services in my setup:

- NFS/SMB/AFP File server

- Time Machine Backups

- Bhyve Hypervisor for running Linux/Docker and FreeBSD VMs

    - Docker

    - PFSense

- Iocage Jails for running FreeBSD-supported services

    - Plex Media Server

    - Syncthing (substitute for Synology Backup)

    - Transmission

    - Syncovery (substitute for Synology Cloud Backups)

- ZFS Snapshots

- ISCSI LUNs

Hardware - HP Microserver Gen8

Considering my power draw requirements and the CPU/RAM requirements, I found a good match in the HP Microserver line, and got interested in the second-to-last generation, the Gen8 server:

The Microserver Gen8 is a very small form factor server that still manages to fit in an HP iLO management interface, up to 16GB of ECC RAM, and the option to upgrade the stock entry-level processor to a Xeon model (albeit not from the latest generations) while keeping the TDP below 60W. It also comes with 4 hot-swap drive cages, options to expand them further with an internal PCIe card, and two Intel Gigabit NICs. It also has an internal USB port and SD slot for booting things like ESXi.

I ended up buying two of them (one new, one secondhand) and expanded both to 16GB RAM and a Xeon E3-1265L V2 @ 2.50GHz. I also used the available internal space to add some SSDs to the mix (Velcro straps to the rescue).

This is the hardware that is running after the update:

(New Hardware)

- 1x HP Microserver Gen8

    - 16GB ECC RAM

    - Xeon E3-1265L V2 @ 2.50GHz

    - 1x LSI SAS 9212-4i4e Controller

    - 2x 60GB SSD (OS)

    - 2x 480GB SSD (SSD Pool)

    - 1x 120GB SSD (HD Pool Cache)

    - 2x 10TB WD Red HDDs (HD Pool in RAID1)

- 1x HP Microserver Gen8

    - 16GB ECC RAM

    - Xeon E3-1265L V2 @ 2.50GHz

    - 1x 800GB Nytro WarpDrive BLP4-800

        - 1x OS drive

        - 2x SSD Pool

        - 1x HD Pool Cache

    - 3x 6TB WD Red HDDs (HD Pool RAIDZ1)

- Cisco SG-200 24 port switch

- 2x Ubiquiti AC-LR-PRO for WiFi coverage of the house and the yard

- 2x Raspberry pi2

    - Gas burner automation

    - Volumio Media Player

- 1x Raspberry pi1B (Home Energy Consumption, solar PV energy production, RFM01 sensors receiver)

- 1x VDSL modem

- 1x WIFI WAN AP

- Multiple ESP8266 nodes scattered around the house pulling automation duty like

    - gate opener

    - pool controller

    - Christmas lights

    - remote raspberry hard reset

- a RFM01 capable Arduino controlling two sump pumps and pulling flood detection duty

- 1x Amazon FireTv

- 2x Desktop PCs (OSX)

- 4x Laptops

- 2x Android Tablets

- 2x Ipads

- Various smartphones

My original idea was to keep one of the two microservers running and the other as a cold standby in case of problems, mainly for power draw reasons, while keeping a physical PFSense firewall running. After experimenting with the platform, I ended up ditching the physical firewall and configuring the second microserver as hot standby for backups, primary server for Time Machine backups, and primary server for running a virtualised instance of PFSense. If one of the microservers fails, the other one has enough resources to run all the virtualized components on its own.

FreeNAS

The FreeNAS platform has grown during the past years, both in terms of available features and in terms of user friendliness. You are still required to be knowledgeable about a LOT of stuff in order to use it as a daily driver. The Synology interface and ecosystem are way more evolved, but at the same time they lack the simplicity of ZFS managed storage. Here are a couple of screenshots of the main dashboard and interface.

Storage/Backups

The main FreeNAS server is used for hosting a Docker VM and multiple Jails (Plex, Syncthing, Transmission), and for providing all file services to the Home LAN. Both pools are snapshotted once every two hours and backed up to the secondary FreeNAS server.

HPMICRO-01 Pools

- 480GB SSD Pool (RAID1)

    - Docker VM

    - Docker Volumes

    - Jails

    - ISCSI LUNs for Raspberry Pis

- 10TB HD Pool (RAID1)

    - Media Files

    - Photos

    - Work files

HPMICRO-01 Snapshots

Both pools are snapshotted once every two hours, and snapshots are kept for 4 weeks.
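
To get a feeling for what this schedule means in practice, here is a minimal Python sketch of the retention arithmetic. It assumes snapshots are taken around the clock (no restricted time window), and the helper names are purely illustrative, not part of FreeNAS.

```python
from datetime import datetime, timedelta

SNAPSHOT_INTERVAL = timedelta(hours=2)  # schedule described above
RETENTION = timedelta(weeks=4)          # lifetime described above


def snapshots_retained(interval: timedelta, retention: timedelta) -> int:
    """Number of snapshots per pool that exist once the schedule is at steady state."""
    return int(retention / interval)


def expired(snapshot_times, now, retention=RETENTION):
    """Return the snapshot timestamps that are older than the retention window."""
    return [t for t in snapshot_times if now - t > retention]


if __name__ == "__main__":
    # 12 snapshots a day for 28 days
    print(snapshots_retained(SNAPSHOT_INTERVAL, RETENTION))
```

With these numbers each pool carries a few hundred snapshots at any given time, which ZFS handles without trouble since snapshots only consume space for blocks that have changed.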

HPMICRO-01 Replication Tasks

The ZFS snapshots are continuously replicated to the secondary FreeNAS server.
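
FreeNAS drives replication from its own task scheduler, so the following is not what it literally runs. Conceptually, though, replicating a snapshot boils down to an incremental `zfs send` piped into `zfs receive` on the other box. This hedged Python sketch only builds such a command line as a string; the dataset, snapshot and host names are hypothetical placeholders.

```python
import shlex


def replication_command(dataset, prev_snap, new_snap, remote_host, remote_dataset):
    """Build (but do not run) an incremental send/receive pipeline.

    `zfs send -i A B` streams only the blocks that changed between snapshots
    A and B; `zfs receive -F` rolls the target back to its latest snapshot
    before applying the stream.
    """
    send = ["zfs", "send", "-i", f"{dataset}@{prev_snap}", f"{dataset}@{new_snap}"]
    recv = ["ssh", remote_host, "zfs", "receive", "-F", remote_dataset]
    return f"{shlex.join(send)} | {shlex.join(recv)}"


if __name__ == "__main__":
    # All names below are made up for illustration.
    print(replication_command(
        "ssdpool/docker", "auto-1", "auto-2",
        "hpmicro-02", "hdpool/backup/docker",
    ))
```

Because only the delta between two snapshots travels over the wire, a two-hour replication cycle stays cheap even on a Gigabit link.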

HPMICRO-02 Pools

- 360GB SSD Pool (RAID0)

    - FreeBSD VM

    - Jails

- 12TB HD Pool (RAIDZ1)

    - HPMICRO-01 Backup

    - Time Machine Backups
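
The HD pool sizes listed for the two servers follow directly from the disk layout. As a rough sanity check, this small Python sketch computes raw usable capacity for a mirror and for a RAIDZ1 vdev. It ignores ZFS metadata overhead and the TB/TiB difference, so real-world numbers will be somewhat lower; the function names are illustrative only.

```python
def mirror_capacity(disk_sizes_tb):
    """A mirror keeps a full copy on every disk, so usable space is the smallest disk."""
    return min(disk_sizes_tb)


def raidz1_capacity(disk_sizes_tb):
    """RAIDZ1 gives up one disk's worth of space to parity."""
    return (len(disk_sizes_tb) - 1) * min(disk_sizes_tb)


if __name__ == "__main__":
    print(mirror_capacity([10, 10]))   # HPMICRO-01 HD pool: 2x 10TB mirrored
    print(raidz1_capacity([6, 6, 6]))  # HPMICRO-02 HD pool: 3x 6TB in RAIDZ1
```

The backup server ends up with slightly more raw space than the primary, which leaves room for both the replicated snapshots and the Time Machine backups.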

Virtual Machines

FreeNAS uses the bhyve hypervisor, which is able to run both Linux and FreeBSD guests.

Docker VM

Used to run all Linux workloads. Volumes are mapped to a ZFS dataset that is included in the standard snapshot/backup cycle.

I use Portainer to manage the Docker container estate:

PFSENSE VM

I decided to go virtual on the main firewall as well. I know it is not regarded as a 'safe' choice from the security point of view: if someone manages to gain access to the firewall VM, they have a much wider attack surface and an easier path to my main servers. It is a risk that I willingly accept in the name of saving the 20-30W drawn by the physical firewall I was running.

The Bhyve VM can handle VLAN tagged interfaces, and I am taking advantage of that to run the WAN traffic (and the storage sync tasks) off a single physical NIC. The only issue I have noticed is that, because of some quirk in either the FreeBSD kernel or the vtnet driver, the maximum throughput over a single virtual network interface is limited to about 500Mbit/s (as opposed to the full 1Gbit/s that should be theoretically possible).
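
For readers unfamiliar with VLAN tagging: sharing one physical NIC between WAN and storage traffic works because each Ethernet frame carries a 4-byte IEEE 802.1Q tag identifying its VLAN. The Python sketch below builds that tag and inserts it into a frame. The VLAN IDs are hypothetical (my actual numbering is not shown here), and a real NIC would also recompute the frame check sequence.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks a frame as 802.1Q tagged


def vlan_tag(vlan_id, priority=0, dei=0):
    """Return the 4-byte 802.1Q tag: TPID followed by PCP (3 bits), DEI (1 bit), VID (12 bits)."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError("usable VLAN IDs are 1..4094")
    if not 0 <= priority <= 7 or dei not in (0, 1):
        raise ValueError("priority must be 0..7 and dei 0 or 1")
    tci = (priority << 13) | (dei << 12) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)


def tag_frame(frame, vlan_id):
    """Insert the tag right after the destination and source MAC addresses (12 bytes)."""
    return frame[:12] + vlan_tag(vlan_id) + frame[12:]


if __name__ == "__main__":
    WAN_VLAN, SYNC_VLAN = 10, 20  # hypothetical IDs, for illustration only
    untagged = bytes(12) + b"\x08\x00" + b"payload"
    print(tag_frame(untagged, WAN_VLAN).hex())
    print(tag_frame(untagged, SYNC_VLAN).hex())
```

The switch port facing the microserver has to be configured as a trunk carrying both VLANs, so that the frames are separated again on the other side.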

Final Thoughts

Both servers are now averaging around 100W of power draw, an increase of about 10W over the previous Synology/hardware firewall combination, but they provide a whole new level of capabilities, and a local backup that is readily available in case one of the two servers fails. In addition, I now also have complete remote management capabilities (the servers are in a not easily reachable location) and monitoring if needed.
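
To put the power figures in perspective, here is a quick Python sketch converting a constant draw in watts into energy per year. It assumes the 100W figure is the combined average for both servers and that the load is constant 24x7; the function name is illustrative.

```python
HOURS_PER_YEAR = 24 * 365


def annual_kwh(watts):
    """Energy used in one year by a device drawing `watts` continuously."""
    return watts * HOURS_PER_YEAR / 1000


if __name__ == "__main__":
    print(annual_kwh(100))  # new setup, assumed combined draw
    print(annual_kwh(90))   # previous Synology + hardware firewall setup
    print(annual_kwh(10))   # the extra 10W on its own
```

Multiply the results by your own electricity tariff to see what the extra 10W costs per year.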

The Microserver is really a fine piece of engineering (my HP contacts point out it's probably due to Compaq people still being in charge of ProLiant hardware design). I also managed to get even more comfortable working with Jails/FreeBSD (and that is a good thing in my book) and had a lot of fun.

Total budget for the project was about 1000EUR for the hardware (2x microservers, 2x Xeon processors, 2x 16GB ECC memory expansion, additional LSI controller) and about 500EUR for the storage (I already had most of the SSDs and the 6TB HDs). I also managed to recover about 500EUR by selling the Synology NAS, so all in all it wasn't too costly, considering the quality of the hardware and the new capabilities of the system.

The most important part: it kept my brain busy for the better part of three months, and that is not an easy feat :)
